Food Taste Similarity Prediction Based on Images and Human Judgments

Food Taste Similarity Triplets

Description

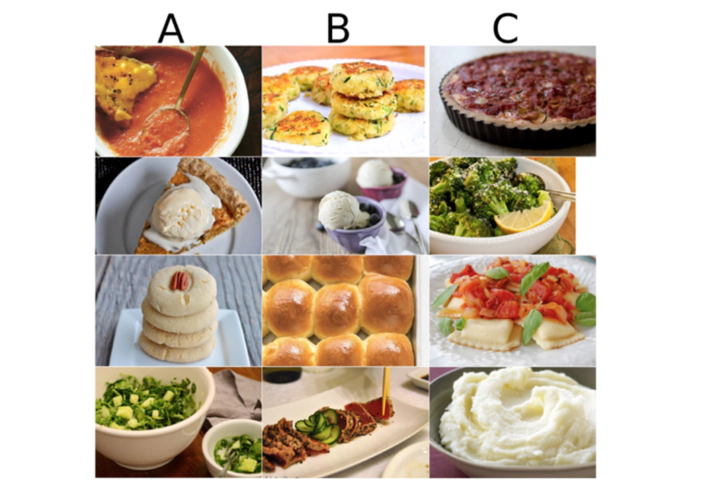

The dataset consists of images of 10,000 dishes, together with a set of triplets that encode human judgments of food taste similarity. The goal of the project is to predict, for unseen triplets $(A, B, C)$, whether dish $A$ is more similar in taste to $B$ or to $C$. In this project, image embeddings are learned with an autoencoder whose loss is computed from distances between the embeddings.
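The snippet below is a minimal sketch of how distance-based triplet scoring can work once each dish has an embedding. It assumes Euclidean distance, a hinge-style triplet margin loss with a hypothetical `margin` of 1.0, and a 1/0 output label (1 meaning $A$ is closer to $B$). None of these choices is confirmed by the project description. The embeddings are plain hand-written vectors standing in for the autoencoder's output, and the function names are illustrative only.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def euclidean(u: Vector, v: Vector) -> float:
    """Euclidean distance between two embedding vectors (assumed metric)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor: Vector, positive: Vector, negative: Vector,
                 margin: float = 1.0) -> float:
    """Hinge-style triplet margin loss (an assumed choice of loss).

    The loss is zero once the anchor is closer to the positive than to the
    negative by at least `margin`. Otherwise it grows linearly with the gap.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def predict_triplet(emb_a: Vector, emb_b: Vector, emb_c: Vector) -> int:
    """Return 1 if dish A is predicted closer in taste to B than to C, else 0.

    The 1/0 label convention is a hypothetical assumption for illustration.
    """
    return 1 if euclidean(emb_a, emb_b) < euclidean(emb_a, emb_c) else 0


if __name__ == "__main__":
    # Toy 2-D embeddings standing in for learned autoencoder embeddings.
    a, b, c = [0.0, 0.0], [1.0, 0.0], [3.0, 4.0]
    print(predict_triplet(a, b, c))  # d(A,B)=1 < d(A,C)=5, so prints 1
    print(triplet_loss(a, b, c))     # max(0, 1 - 5 + 1), so prints 0.0
```

During training, a loss of this kind pulls the embedding of $A$ toward the dish judged more similar and pushes it away from the other. At inference time, the same distances decide the predicted label.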