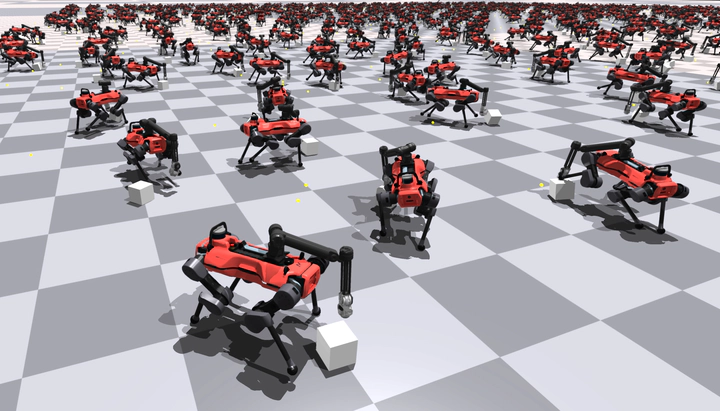

Object Manipulation via Hierarchical Reinforcement Learning Control

Box Pushing with ALMA D

Description

Reinforcement learning offers a promising methodology for developing robust robotic skills, but it typically yields separate controllers for specific behaviors. The common approach is to train a robot on a single target skill in an elaborately designed training setting. To further improve legged maneuverability, it is natural to combine such individually learned skills to achieve novel and complex movements. Building on previous successes in legged locomotion with deep reinforcement learning, we develop a hierarchical control method that separates high-level planning from low-level task execution. We demonstrate the effectiveness of our method in a mobile manipulation setting, where the robot is asked to push a box to a given location. The learned policy specifies end-effector position targets relative to the robot base frame while driving the base towards the target object.
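The split between high-level planning and low-level execution can be illustrated with a minimal sketch. It assumes a planar base pose (x, y, yaw), uses a simple proportional controller as a stand-in for the learned high-level policy, and assumes perfect velocity tracking in place of the learned low-level locomotion controller. All names (`BasePose`, `world_to_base`, `high_level_policy`, `run_episode`) and all gains and rates are hypothetical and not taken from the original work. The point it shows is that the end-effector target is expressed in the robot base frame, and that the high-level command is refreshed only every `decimation` low-level steps.

```python
import math
from dataclasses import dataclass


@dataclass
class BasePose:
    """Planar robot base pose in the world frame (hypothetical simplification)."""
    x: float
    y: float
    yaw: float  # heading in radians


@dataclass
class HighLevelCommand:
    base_velocity: tuple   # (vx, vy, yaw_rate), expressed in the base frame
    ee_target_base: tuple  # end-effector position target in the base frame


def world_to_base(pose, point):
    """Express a world-frame 3D point in the robot base frame (yaw-only rotation)."""
    dx, dy = point[0] - pose.x, point[1] - pose.y
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    # Apply the inverse (transposed) yaw rotation to the world-frame offset.
    return (c * dx + s * dy, -s * dx + c * dy, point[2])


def clamp(v, limit):
    return max(-limit, min(limit, v))


def high_level_policy(pose, box, gain=0.5, max_speed=1.0):
    """Stand-in for the learned high-level policy (an assumption, not the learned
    network): drive the base toward the box and place the end-effector target
    at the box position, both expressed in the base frame."""
    box_b = world_to_base(pose, box)
    vx = clamp(gain * box_b[0], max_speed)
    vy = clamp(gain * box_b[1], max_speed)
    yaw_rate = clamp(gain * math.atan2(box_b[1], box_b[0]), max_speed)
    return HighLevelCommand((vx, vy, yaw_rate), box_b)


def run_episode(pose, box, steps=200, decimation=10, dt=0.02):
    """Run the hierarchy: the high level updates every `decimation` steps,
    and the low level tracks the most recent command at every step."""
    cmd, high_level_calls = None, 0
    for t in range(steps):
        if t % decimation == 0:
            cmd = high_level_policy(pose, box)
            high_level_calls += 1
        # Low level (assumed perfect tracking): rotate the base-frame velocity
        # into the world frame and integrate the base pose.
        vx, vy, wz = cmd.base_velocity
        c, s = math.cos(pose.yaw), math.sin(pose.yaw)
        pose = BasePose(pose.x + (c * vx - s * vy) * dt,
                        pose.y + (s * vx + c * vy) * dt,
                        pose.yaw + wz * dt)
    return pose, high_level_calls, cmd.ee_target_base


if __name__ == "__main__":
    final, calls, ee = run_episode(BasePose(0.0, 0.0, 0.0), box=(2.0, 0.0, 0.3))
    print(calls, final, ee)
```

Running the high level at a lower rate than the low level mirrors the usual motivation for such hierarchies: planning decisions change slowly, while locomotion and arm tracking need fast feedback. In this sketch the end-effector target is simply the box position; a real pushing policy would instead choose contact points on the box face.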