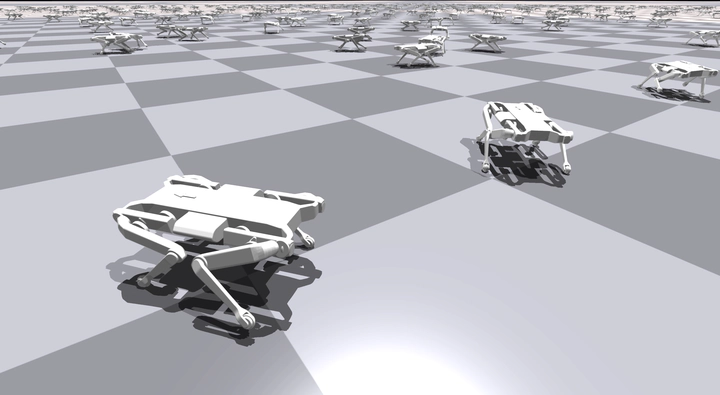

Legged Locomotion with Graph Neural Network Policies

Solo 12 with Structured Policies

Description

Generic reinforcement learning methods parameterize an agent’s policy with a multi-layer perceptron (MLP) that takes the concatenation of all observations from the environment as input and predicts actions. In this work, we apply NerveNet to model the structure of the agent explicitly; for a robot, this structure naturally takes the form of a graph derived from its morphology. Serving as the agent’s policy network, NerveNet first propagates information over this graph and then predicts actions for the individual parts of the agent.

Concretely, we embed joint-space observations as node features, and the nodes are connected by edges that follow the robot’s legs. The base states form the global features of the graph. At each time step, the node and global features are updated with the latest observation. Each edge attribute is computed from the features of its two incident nodes and the global features, and the resulting edge attributes are aggregated at the nodes, which passes messages across the graph. The nodes are then updated with these messages and, again together with the global features, predict the joint position outputs.
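The message-passing steps described above can be sketched as a single forward pass in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors’ implementation: the 12 nodes are taken to be Solo 12’s joints (three per leg, assumed ordered hip abduction, hip flexion, knee), edges link consecutive joints within a leg in both directions, random single-layer weights stand in for trained MLPs, and the names `GraphPolicy`, `build_edges`, `node_dim`, `global_dim` and `num_steps` are hypothetical. The update functions here are plain feed-forward layers and need not match the ones used in this work.

```python
import numpy as np

# Hypothetical Solo 12 graph: 4 legs x 3 joints = 12 nodes (assumed layout).
LEGS = ["FL", "FR", "HL", "HR"]
JOINTS_PER_LEG = 3
NUM_NODES = len(LEGS) * JOINTS_PER_LEG


def build_edges():
    """Directed edges between consecutive joints of each leg, in both directions."""
    senders, receivers = [], []
    for leg in range(len(LEGS)):
        first = leg * JOINTS_PER_LEG
        for j in range(JOINTS_PER_LEG - 1):
            a, b = first + j, first + j + 1
            senders += [a, b]
            receivers += [b, a]
    return np.array(senders), np.array(receivers)


def dense(rng, in_dim, out_dim):
    """Random single-layer weights standing in for a trained MLP."""
    w = rng.normal(scale=1.0 / np.sqrt(in_dim), size=(in_dim, out_dim))
    return w, np.zeros(out_dim)


def apply(layer, x, act=np.tanh):
    w, b = layer
    return act(x @ w + b)


class GraphPolicy:
    def __init__(self, node_dim, global_dim, hidden=32, num_steps=2, seed=0):
        rng = np.random.default_rng(seed)
        self.senders, self.receivers = build_edges()
        self.num_steps = num_steps
        # One set of weights shared by every joint and every edge.
        self.encode = dense(rng, node_dim, hidden)
        self.edge_fn = dense(rng, 2 * hidden + global_dim, hidden)
        self.node_fn = dense(rng, 2 * hidden + global_dim, hidden)
        self.out_fn = dense(rng, hidden + global_dim, 1)

    def __call__(self, joint_obs, base_obs):
        """joint_obs: (NUM_NODES, node_dim); base_obs: (global_dim,) base state."""
        h = apply(self.encode, joint_obs)
        g = base_obs.shape[0]
        u_nodes = np.broadcast_to(base_obs, (NUM_NODES, g))
        u_edges = np.broadcast_to(base_obs, (len(self.senders), g))
        for _ in range(self.num_steps):
            # Edge attributes from both incident nodes plus the global features.
            edge_in = np.concatenate(
                [h[self.senders], h[self.receivers], u_edges], axis=1)
            e = apply(self.edge_fn, edge_in)
            # Aggregate (sum) incoming messages at each receiving node.
            agg = np.zeros_like(h)
            np.add.at(agg, self.receivers, e)
            # Node update from aggregated messages, own state and globals.
            h = apply(self.node_fn, np.concatenate([agg, h, u_nodes], axis=1))
        # One joint position target per node, again conditioned on the globals.
        out = apply(self.out_fn, np.concatenate([h, u_nodes], axis=1),
                    act=lambda x: x)
        return out[:, 0]


if __name__ == "__main__":
    policy = GraphPolicy(node_dim=2, global_dim=9)  # placeholder sizes
    joint_obs = np.zeros((NUM_NODES, 2))  # e.g. joint position and velocity
    base_obs = np.zeros(9)                # e.g. base orientation and velocities
    print(policy(joint_obs, base_obs).shape)
```

Because the same edge and node functions are applied at every joint, the parameter count does not grow with the number of legs; summing incoming messages rather than averaging them is an arbitrary choice in this sketch.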